4 min to read

How QLoRA lets you fine-tune models that have billions of parameters on a relatively small GPU

Introduction

QLoRA is a fine-tuning method that combines Quantization and Low-Rank Adapters (LoRA). QLoRA is revolutionary in that it democratizes fine-tuning: it enables one to fine-tune massive models with billions of parameters on relatively small, highly available GPUs.

Quantization in the context of deep learning is the process of reducing the numerical precision of a model’s tensors, making the model more compact and the operations faster in execution. Quantization maps high-precision floating points to lower-precision values, usually 8- or even 4-bit fixed-point representation integers. This reduce tensor sizes by 75% or even 87.5%, but usually results in a significant reduction in model accuracy; however, it may also help the model generalize better, so experimentation may be worthwhile.

LoRA, which stands for “Low Rank Adaptation,” is a method designed to fine-tune large pre-trained language models more efficiently by reducing the number of trainable parameters.

First, some definitions. A low-rank approximation of a matrix aims to approximate the original matrix as closely as possible, but with a lower rank. The rank of a matrix is a value that gives you an idea of the matrix’s complexity; a lower-rank matrix reduces computational complexity, and thus increases efficiency of matrix multiplications. Low-rank decomposition refers to the process of effectively approximating a matrix A by deriving low-rank approximations of A. Singular Value Decomposition (SVD) is a common method for low-rank decomposition.

More details for the math people; feel free to skip this. The rank of a matrix is the linear space spanned by its rows or columns; to be precise, it is the maximal number of linearly independent columns (or rows) in a matrix. Low-rank decomposition approximates a given matrix A with lower-rank matrices by a product of two matrices U and V, which can then be further decomposed into matrices of lower dimensions.

Now, onto LoRA. Fine-tuning usually involves updating the entire set of parameters in the model, which can be computationally expensive and time-consuming for large language models. LoRA makes this process more efficient by creating and updating low-rank approximations of the original weight matrices (called update matrices) which are formed using low-rank decomposition on the original weight matrix. Only these matrices are updated during fine-tuning - the original model weights remain the same - and thus LoRA’s total number of trainable parameters is equal to the size of the low-rank update matrices. Since the low-rank matrices are being updated instead of all of the larger matrices with far more parameters, we can do this on a smaller, cheaper GPU.

Here’s a simplified explanation of how LoRA works:

-

Initial Model: Start with a large pre-trained model (e.g., Llama 2, Mistral, etc.).

-

Low-Rank Matrix: Introduce low-rank approximations for the matrices that will be used to adapt the model for the specific task at hand. Low-rank matrices (adapters) are typically formed for all linear layers of the model, but this can vary based on the model architecture and the task.

-

Transform Layers: Instead of directly modifying the original weights of the model, LoRA applies a transformation using the low-rank matrices to the outputs of affected layers.

-

Fine-tuning: During the fine-tuning process, only the parameters in the low-rank matrices are updated. The rest of the model’s parameters are kept fixed. Again, updating only low-rank matrices allows for fine-tuning on smaller, cheaper GPUs.

-

Prediction: For making predictions, the adapted layers are used in conjunction with the original pre-trained model. The low-rank adapted layers act as a kind of “add-on” to the existing architecture, adjusting its behavior for the specific task.

The benefits of LoRA include:

🌟 Efficiency: Because it only updates a small subset of parameters, fine-tuning is faster and requires less computational power. According to the LoRA paper, it can reduce the number of trainable parameters by 10,000 times and the GPU memory requirement by 3 times.

🌟 Specialization: The low-rank adaptation allows the model to specialize in a particular task without a complete overhaul of the original weights.

🌟 Preservation: By keeping the bulk of the model fixed, LoRA helps preserve the generalization capabilities of the original pre-trained model while still enabling task-specific adaptations.

Quantization + LoRA = QLoRA

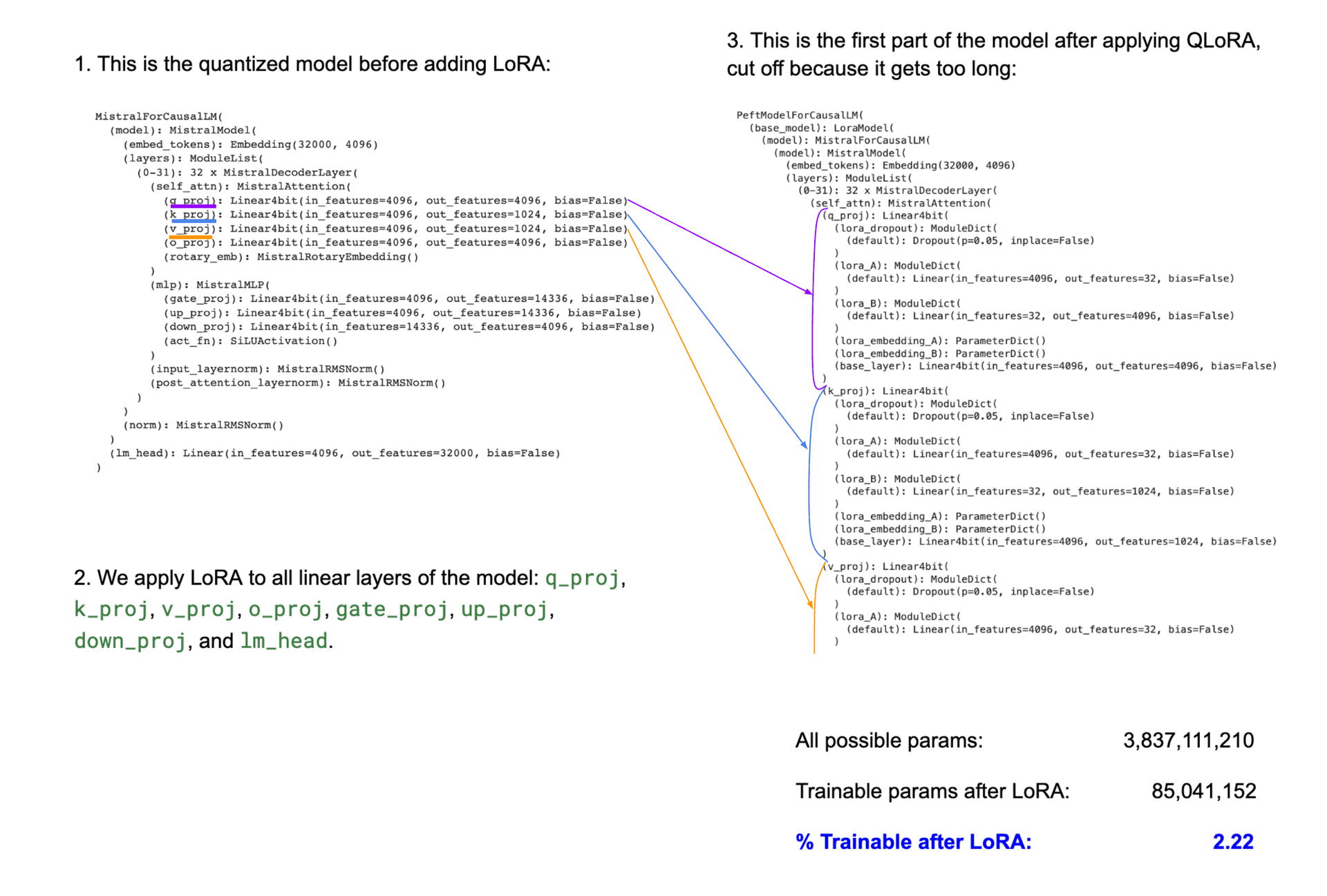

In this method, the original model’s parameters are first quantized to lower-bit values based on a user-defined quantization configuration. This makes the model more compact. Subsequently, LoRA is applied to the model’s layers to further optimize for the specific task. This combination in QLoRA allows for fine-tuning on significantly less computational power, which essentially democratizes the ability to fine-tune models.

TL;DR

QLoRA, a method which combines Quantization and Low-Rank Adaptation (LoRA), presents a groundbreaking approach to fine-tuning large pre-trained models. By applying quantization, it efficiently compresses the original model, and through LoRA, it drastically reduces the number of trainable parameters. This synergistic combination democratizes the fine-tuning process, making it feasible to perform on smaller, more accessible GPUs. By democratizing access to sophisticated fine-tuning methods, QLoRA stands as a significant advancement in the field of machine learning, promising a more inclusive future for both researchers and practitioners alike.

Try it Yourself!

To see QLoRA in action and fine-tune a model yourself, you can follow this guide. It takes you through everything step-by-step. If you’d like to fine-tune using QLoRA with your own dataset, use this guide.

Comments